GPT-2: Crafting Culinary Creativity 👩🍳🥑

In today’s world of AI, the possibilities for innovation are endless, even in the kitchen. In this project I aim to leverage GPT-2 Medium to create a recipe generator and explain how to train and finetune this model to create diverse and unique recipes from a simple list of ingredients. GPT-2 Medium, which has 345 million parameters, is a good middle ground between other models while still providing a decent level of sophistication in generating coherent and contextually appropriate text. I found this model to be manageable in terms of the computational resources required for both training and inference.

More information about this model can be found in the model card from Hugging Face

Text Preprocessing

The data that was used for this project came from Kaggle. The dataset

the data set was reduced to include the following important features:

- recipe_name: The name of the recipe. (String)

- ingredients: A list of ingredients required to make the recipe. (List)

- directions: A list of directions for preparing and cooking the recipe. (List)

Training and Finetuning

The first step to training the model is to convert the csv file into sequential text. This is important because language models like GPT-2 are trained to predict the next word in a sequence, given the previous words. For the model to understand how ingredients relate to the recipe name and directs, the data needs to show examples of how these elements are sequenced together in natural language i.e. as a continuous flow of text, much like how a human would read and understand a recipe. These models are designed to work with plain text data. While a CSV file is structured, it needs to be converted into a format that the model can understand.

It is possible to train the model with high-performance CPU’s, but training was much more manageable with access to a GPU.

# check if GPU is available

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

The model and tokenizer are loaded using the transformers library from Hugging Face

model_name = "gpt2-medium"

tokenizer = GPT2Tokenizer.from_pretrained(model_name)

model = GPT2LMHeadModel.from_pretrained(model_name)

model.to(device)

The tokenizer is responsible for converting raw text into tokens(numbers) that the model can understand.

tokenizer = GPT2Tokenizer.from_pretrained(model_name)

tokenizer.add_special_tokens{"pad_token": "[PAD]"}

Padding tokens are used to ensure that all sequences in a batch are of the same length by filling shorter sequences with the padding token. While not required by GPT2-Medium, having uniform sequence lengths across a batch during training allows for efficient computation as it simplifies the implementation of parallel processing on GPUs. It is important to then resize the model’s token embeddings to match the new size of the tokenizer’s vocabulary. Since the vocabulary size increased due to the addition of the padding token, the model’s embeddings are resized to include the new token.

model.resize_token_embeddings(len(tokenizer))

The data collator is designed to handle the preparation of batches of data. This includes managing padding, masking, and other preprocessing tasks that are required when feeding data into a language model. One of the parameters included is masked language modeling (MLM), which is set to false, therefore indicating that we are using a standard language modeling setup. This parameter specifies whether the collator should create masked tokens (for models like BERT) or not. For GPT-2, this should be set to false because it is not trained using MLM but rather by predicting the next token in a sequence.

data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)

After playing around with some of the training hyperparameters are I settled on these:

# set the training arguments

training_args = TrainingArguments(

output_dir="./results",

overwrite_output_dir=True,

num_train_epochs=5,

per_device_train_batch_size=2,

save_steps=10000,

save_total_limit=2,

)

Generating Outputs

A simple Flask app was created to read in ingredients from a user, which were then encoded using the tokenizer. The attention mask is used by the model to differentiate between real tokens and padding tokens. During training or inference, the model should only pay attention to the real tokens and ignore the padding tokens.

attention_mask = inputs.ne(tokenizer.pad_token_id).long()

The following hyperparameters were used when generating the recipes:

outputs = model.generate(

inputs,

no_repeat_ngram_size = 2,

attention_mask=attention_mask,

max_length=250,

num_return_sequences=1,

temperature = 0.7, # adjusts randomness

)

no_repeat_ngram_size prevents the model from repeating any n-grams of a certain size. In this case, setting the hyperparameter to 2 ensures that no sequence of 2 tokens will be repeated in the generated text which helps to avoid repetetive outputs.

max_length specifies the maximum length of the generated sequenece.

num_return_sequences specifies the number of generated sequences to return

temperature controls the randomness of the text generation. A lower temperature makes the output more focused and deterministic, while a higher temperature makes the output more random and diverse.

The outputs are then decoded with the tokenizer and sent back through the Flask app.

recipe = tokenizer.decode(outputs[0], skip_special_tokens=True)

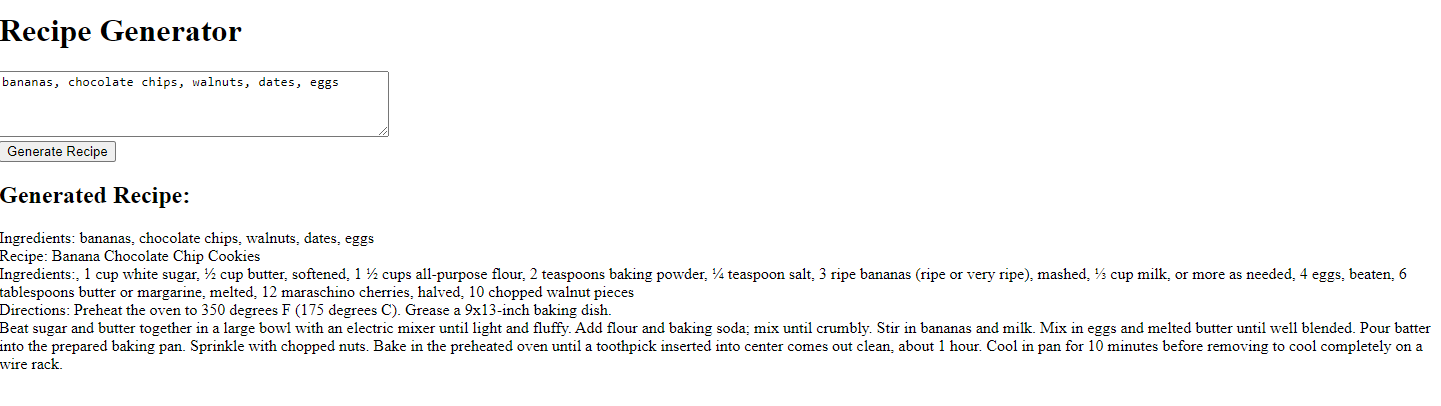

Here is an example of one of the results:

The maraschino cherries are just an extra lil treat 😚🤌

Full code can be found on my github.